December 11, 2025 /SemiMedia/ — A rapid build-out of artificial intelligence computing is reshaping expectations across the semiconductor industry, with analysts and major chip suppliers projecting that the global market could reach the $1 trillion threshold before the end of the decade. The acceleration marks one of the fastest structural shifts the sector has seen in its modern history.

AI spending accelerates structural changes across semiconductor categories

Forecasts from AMD, Nvidia, Broadcom and multiple research groups point to a market expansion driven not only by AI accelerators but also by rising demand for memory, high-bandwidth networking and advanced packaging. The industry generated roughly $650 billion in revenue in 2024, and several projections now place the $1 trillion milestone as early as 2028 or 2029.

AMD chief executive Lisa Su recently raised the company’s long-term outlook, saying the AI hardware ecosystem could represent a $1 trillion opportunity by 2030. She expects strong growth across AMD’s data-center products and pushed back against concerns that the sector is entering a speculative bubble.

Chipmakers raise long-term expectations as demand outlook strengthens

Nvidia issued an even broader estimate, telling investors during its fiscal-2026 second-quarter earnings call that the next five years could bring $3 trillion to $4 trillion in cumulative AI-infrastructure spending. The projection covers hyperscale deployments, sovereign AI projects and enterprise clusters.

The surge is reshaping the balance of demand across silicon categories. Analysts expect data-processing chips to account for more than half of total semiconductor revenue by 2026. AI accelerators, a sub-$100 billion market in 2024, are projected to climb to $300 billion to $350 billion by decade’s end. AI server spending is expected to rise from about $140 billion in 2024 to roughly $850 billion by 2030.

The shift has also elevated ASIC development within hyperscale roadmaps. Broadcom expects its custom-silicon business to exceed $100 billion by the end of the decade, following a previously disclosed $10 billion AI-infrastructure order from a major customer widely believed to be OpenAI.

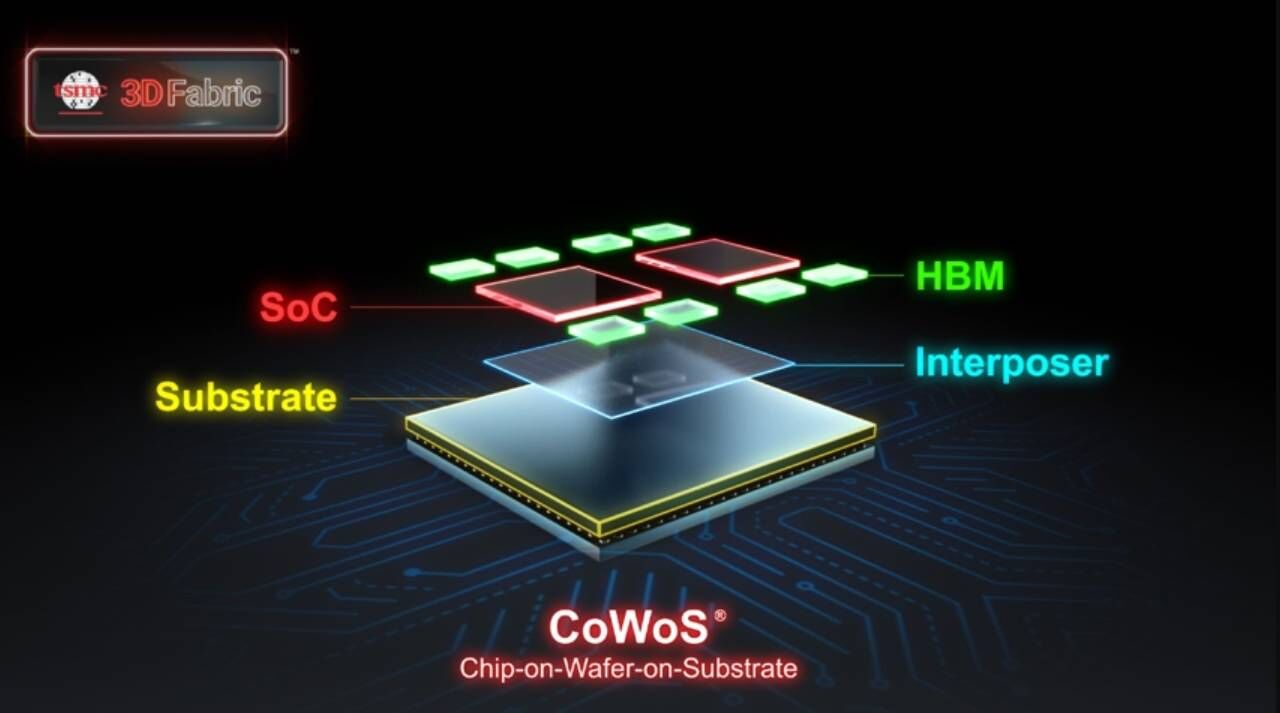

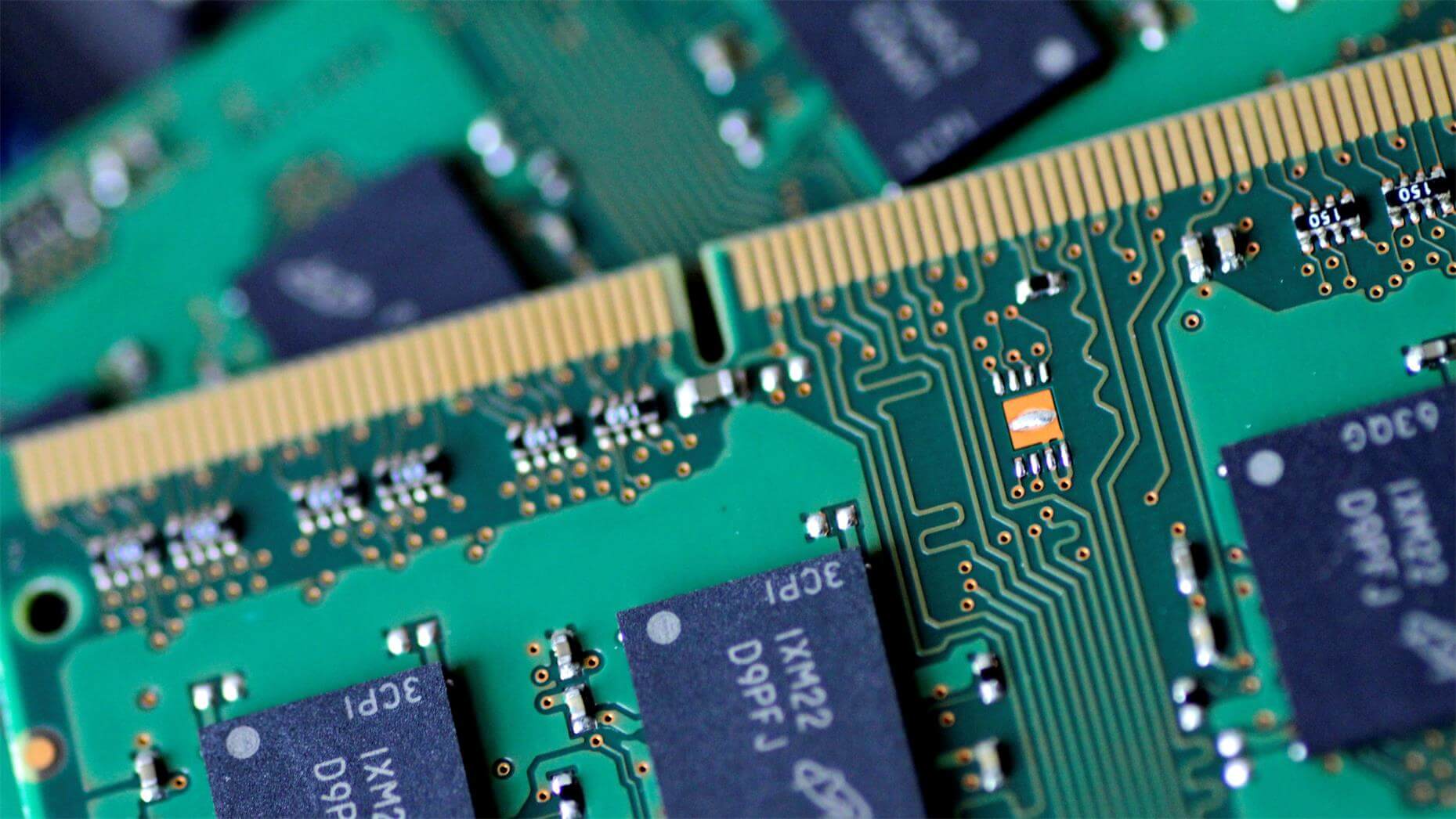

Memory and packaging remain the industry’s tightest bottlenecks. Revenue from high-bandwidth memory (HBM) is forecast to grow from about $16 billion in 2024 to more than $100 billion by 2030. Each new generation of HBM consumes a larger share of wafer supply, driving up demand for DRAM wafer capacity as AI clusters expand. Advanced packaging faces similar pressure, with TSMC's CoWoS capacity expected to rise more than 60% between late 2025 and the end of 2026.

The AI build-out is creating a new cycle for the semiconductor sector, one defined by system-level demand and long-term supply constraints in memory and packaging technologies.
