January 29, 2026 /SemiMedia/ — SK hynix is expected to become the exclusive supplier of high bandwidth memory for Microsoft’s latest AI accelerator, Maia 200, according to South Korean media reports.

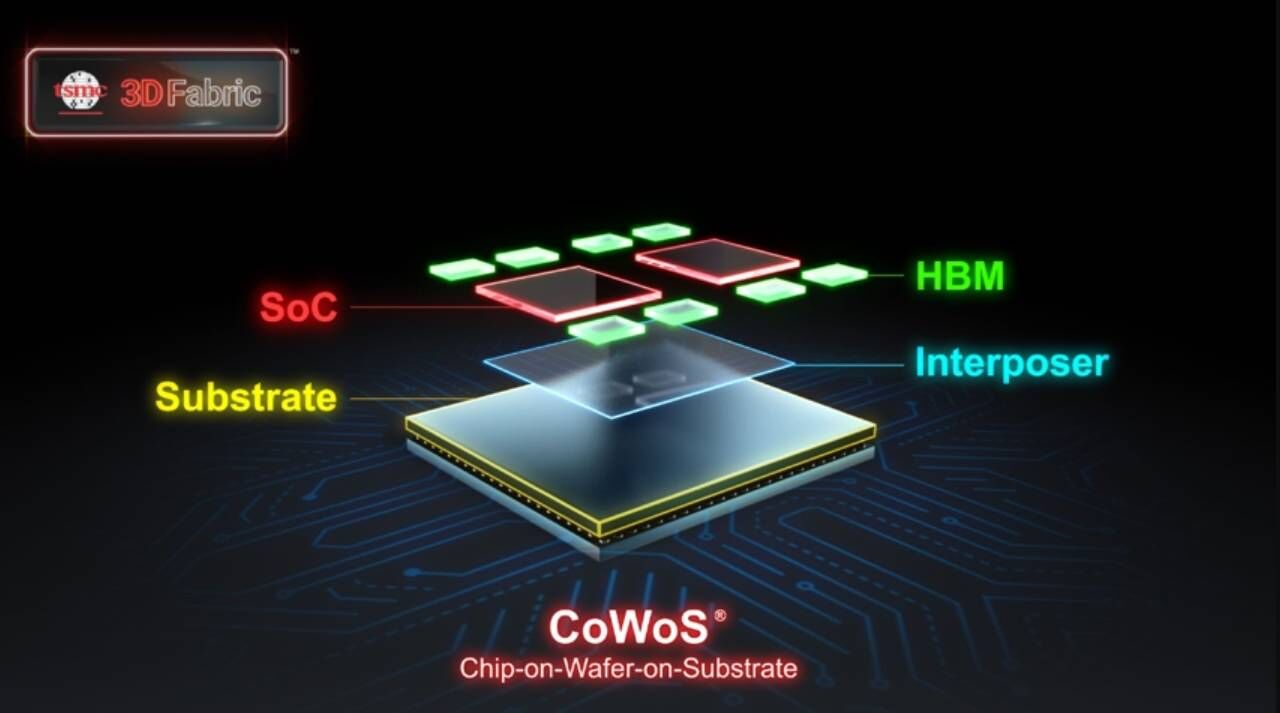

The chip is said to use SK hynix’s HBM3E products, with a total memory capacity of 216GB. The configuration reportedly includes six 12-layer HBM3E stacks supplied by SK hynix, which works out to 36GB per stack. Maia 200 is manufactured using TSMC’s 3-nanometer process and is designed to improve efficiency in AI inference workloads.

Microsoft has already deployed the Maia 200 chip at its data center in Iowa and is expanding deployment to facilities in Arizona as part of its broader in-house AI infrastructure rollout.

Industry sources said the HBM market is gaining new growth drivers beyond demand from Nvidia, as major technology companies accelerate the development of their own AI chips. These custom ASICs are aimed at reducing reliance on general-purpose GPUs. Examples include Google’s seventh-generation Tensor Processing Unit, known as Ironwood, and Amazon Web Services’ third-generation Trainium chip.

UBS analysts noted that SK hynix appears to hold a strong position in supplying HBM to ASIC customers, including Google, Broadcom and Amazon Web Services. Demand from cloud service providers is increasingly shaping the structure of the HBM market.

Looking ahead, SK hynix has had mass production capability for its next-generation HBM4 products in place since September 2025 and has supplied paid samples to Nvidia. The company’s joint HBM optimization program with Nvidia has entered final qualification, with volume production expected to follow.
