Nvidia’s LPDDR shift adds new strain to DRAM supply

November 21, 2025 /SemiMedia/ — A recent shift in Nvidia’s AI server design is expected to reshape the memory supply chain, according to a report released by Counterpoint Research. The company has begun adopting LPDDR, a low-power memory typically used in smartphones, in place of DDR5 to reduce power consumption in its next-generation AI systems. The report projects that the move will help drive server memory prices to double by the end of 2026.

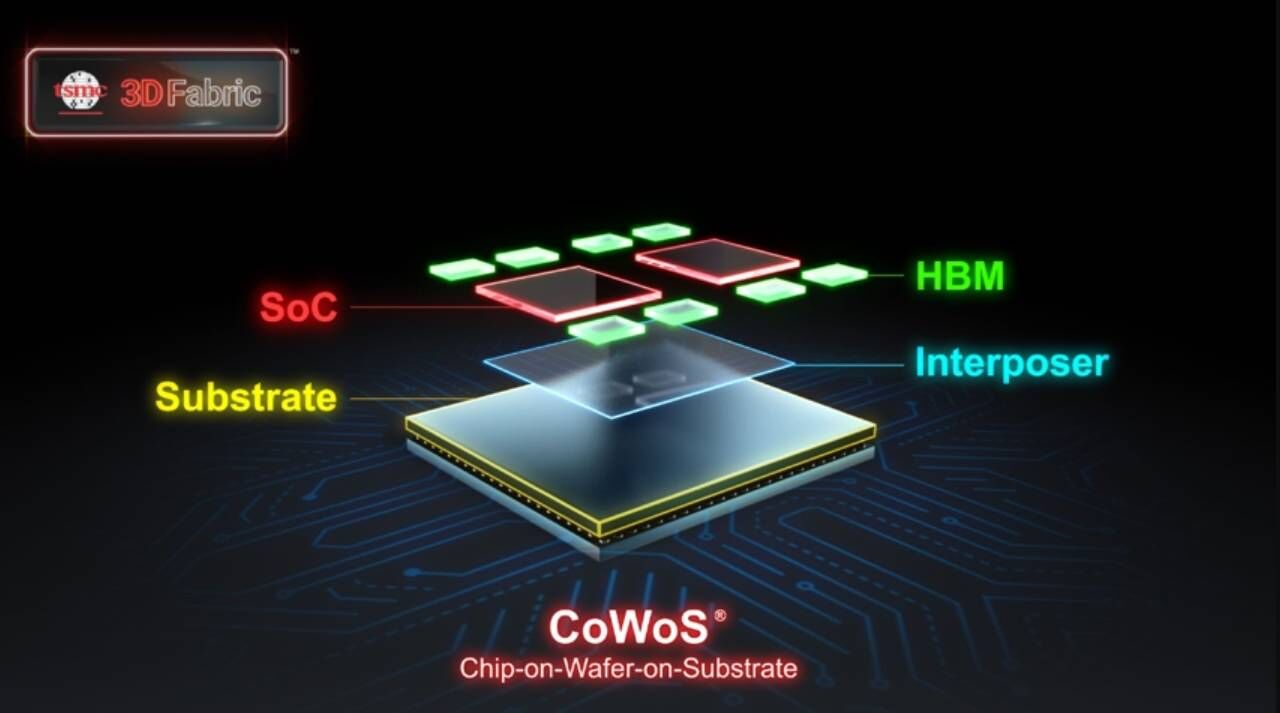

Global electronics suppliers have faced shortages of conventional DRAM for the past two months as manufacturers prioritized high-end memory production, particularly high-bandwidth memory (HBM), to support accelerating demand from AI data centers. Nvidia’s switch is now creating fresh pressure on LPDDR, a segment not originally built for server-scale volumes.

Suppliers face pressure as AI servers reshape demand

Counterpoint noted that an AI server requires significantly more LPDDR than a smartphone, amplifying the strain on low-power DRAM inventories. Samsung Electronics, SK Hynix and Micron—all of which reduced output of legacy DRAM while ramping HBM production—are now dealing with constrained supply across multiple product lines.

The report warned that suppliers may need to divert more capacity to LPDDR to keep up with Nvidia’s orders, potentially tightening availability of both low-end and advanced DRAM. Nvidia’s shift effectively places the company in the demand bracket of a major smartphone maker, a sudden change that the memory supply chain is not positioned to absorb quickly.

Memory pricing outlook points to sharp increases through 2026

Counterpoint expects server memory prices to double by late 2026, while overall DRAM pricing could rise by roughly 50% by the second quarter of 2026. Such increases would intensify cost pressures on cloud providers and AI developers, whose data center budgets are already stretched due to record spending on GPUs and power upgrades.
