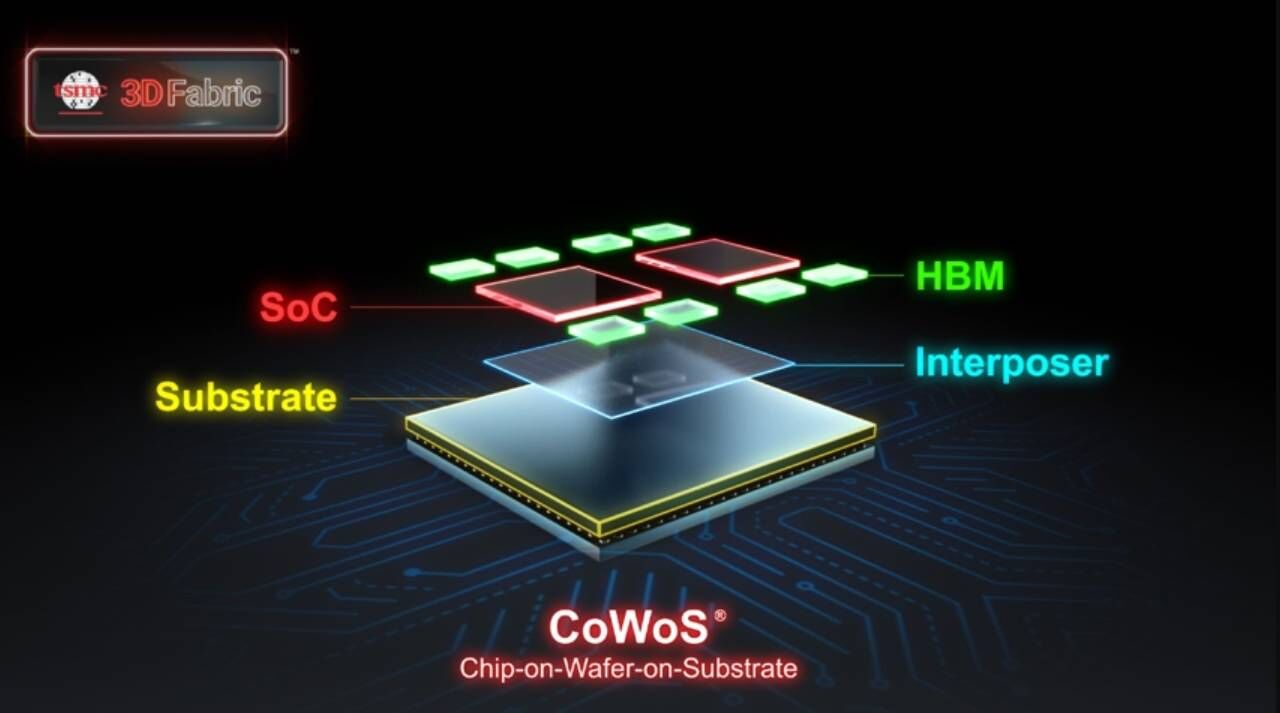

June 17, 2025 /SemiMedia/ — Micron Technology has begun shipping samples of its 36GB, 12-high HBM4 (High Bandwidth Memory) to key customers, the company announced on June 12. The new HBM4 is built on Micron's 1-beta (1β) DRAM process and combines 12-high advanced packaging with a memory built-in self-test (MBIST) feature, targeting next-generation AI workloads.

As demand for generative AI accelerates, memory bandwidth and efficiency have become critical to inference performance. Micron's HBM4 uses a 2048-bit interface and delivers more than 2.0 TB/s of bandwidth per memory stack, which the company says is over 60% better performance than the previous generation. The wider, faster interface speeds data movement and accelerates inference for large language models and chain-of-thought reasoning systems.
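The 2048-bit width and the 2.0 TB/s figure are tied together by simple arithmetic: peak stack bandwidth equals interface width times per-pin data rate. The sketch below works backward from the quoted numbers to the per-pin rate they imply, assuming decimal units (1 TB = 1000 GB) and ignoring protocol overhead. The roughly 7.8 Gb/s result is derived here, not a figure from Micron, and the function names are hypothetical.

```python
def stack_bandwidth_tbps(interface_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth of one memory stack in TB/s (decimal units).

    interface_bits * pin_rate_gbps gives gigabits per second;
    dividing by 8 converts to gigabytes, then by 1000 to terabytes.
    """
    return interface_bits * pin_rate_gbps / 8 / 1000


def required_pin_rate_gbps(interface_bits: int, target_tbps: float) -> float:
    """Per-pin data rate (Gb/s) needed to reach target_tbps over interface_bits pins."""
    return target_tbps * 1000 * 8 / interface_bits


HBM4_WIDTH_BITS = 2048  # interface width stated in the article

# Per-pin rate implied by the ">2.0 TB/s" figure (a derived estimate, not a spec).
pin_rate = required_pin_rate_gbps(HBM4_WIDTH_BITS, 2.0)
print(f"{pin_rate:.4f} Gb/s per pin")

# Round trip: that pin rate across 2048 bits gives back the quoted bandwidth.
print(f"{stack_bandwidth_tbps(HBM4_WIDTH_BITS, pin_rate):.2f} TB/s")
```

Because the quoted bandwidth is a lower bound ("more than 2.0 TB/s"), the computed per-pin rate is likewise a floor rather than an exact operating speed.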

“Micron’s HBM4 delivers exceptional performance, higher bandwidth, and industry-leading energy efficiency,” said Raj Narasimhan, senior vice president and general manager of Micron’s Cloud Memory Business Unit. “Building on our HBM3E milestone, we’re aligning our HBM4 production schedule with customers’ next-generation AI platforms to ensure smooth integration and timely scale-up for market demand.”
